1’16”

“AI presently exists in immobilized form…”

Excerpt of the video presentation of Vivarium, a project by Will Freudenheim, Christina Lu, and Dalena Tran, available at https://vivarium.host/.

As we will see in detail later in this episode, Vivarium is a speculative ecosystem of ‘toy worlds’, or “gamified simulations in which different human-AI configurations scaffold complex embodied intelligence”. We consider Vivarium an example and a possible entry point, certainly one among many, into new approaches to notions such as human autonomy (subject v. environment), human intelligence (natural v. artificial), public space, and even democracy, in an era when technology seems to outpace, and often push to their limits, the models inherited from Western modernity.

4’04”

“Recently I was at this program called Antikythera…”

Antikythera is a design research think tank whose goal is “reorienting planetary computation as a philosophical, technological, and geopolitical force”. Antikythera was founded in 2022 and is directed by philosopher of technology Benjamin Bratton. The think tank produces design prototypes and scenarios through collaborations with foundations, think tanks, companies, and civil society organizations. Antikythera is incubated at the Berggruen Institute, a non-profit organization with offices in Los Angeles, Beijing, and Venice.

8’54”

“I did kind of work in the AI fairness field and I think I might have drifted a bit from the main kind of rhetoric…”

Issues of equality and fairness in AI have been debated for several years now, thanks to objections raised by scholars and critical technologists as well as, on some occasions, by researchers working for the few companies developing these systems. Facial recognition was a particularly prominent case, but similar debates have addressed the algorithmic filtering of information on social media and the use of algorithmic techniques in policing, education, insurance, and many other fields.

Among the several research materials available on the topic of AI ethics and fairness that were produced between 2015 and 2020, we could mention the Algorithmic Justice League’s Research Library, Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor by Virginia Eubanks, Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy by Cathy O'Neil, Algorithms of Oppression by Safiya Umoja Noble, Algorithmic Accountability: A Primer by Robyn Caplan, Joan Donovan, Lauren Hanson, and Jeanna Matthews, just to name a few.

As concerns about fairness and trustworthiness quickly permeated the corporate world under public pressure, technologists, social researchers, journalists, activists, and artists began to scrutinize how principles of justice were applied in the complex technological field of big data and AI. Sometimes this also required a social and cultural assessment of the notion of justice as applied to sociotechnical devices, which led to broader definitions of the problem itself. For example, the AI Now Institute launched the A New AI Lexicon series, and the Data & Society institute gradually shifted its attention from big data towards the vast field of digital automation.

It’s worth noting that critical technologists and artists often pioneered research into the technocultural blind spots of machine learning. Adam Harvey’s Exposing AI, Trevor Paglen and Kate Crawford’s Excavating AI, Vladan Joler and Kate Crawford’s Anatomy of an AI System, and Mimi Ọnụọha’s The Library of Missing Datasets are relevant examples of projects that summarize independent research carried out since the early 2010s.

By the way, the issue of fairness in AI was addressed by Vladan Joler in the previous episode of our podcast, “Statistical Truths (A Genealogy)”, in which he questioned the fairness of current “intelligent systems” on a material and historical basis, but also warned that applying the notion of ethics to AI is slippery ground, from both a technical and a political perspective.

10’09”

“There's a process called RLHF…”

Reinforcement learning from human feedback (RLHF) is a machine learning technique that uses human feedback to optimize ML models. Its purpose is to make models perform tasks in ways more ‘aligned’ with ‘human’ goals, wants, and needs.

Reinforcement learning trains software to take actions that maximize a reward signal. In RLHF, humans are shown several responses the model gives to the same prompt and indicate which ones they prefer; these preference judgments are typically used to train a separate reward model, which then supplies the reward signal for fine-tuning the original model. In this way, human feedback is built into the reward function.

In this sense, RLHF does not change the underlying architecture of a large model, nor can it purge or improve its training dataset; rather, it helps to “mask” some of its outputs.
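The preference-learning step described above can be sketched with a pairwise logistic (Bradley-Terry) loss: the reward model is nudged until, for each human comparison, the preferred response scores higher than the rejected one. This is a minimal sketch under loud assumptions: each response is summarized by a hypothetical hand-made feature vector ([helpfulness, toxicity]), the reward model is linear, and the subsequent reinforcement learning step that fine-tunes the language model against this reward is not shown. Real reward models are neural networks that read the text itself.

```python
import math

def reward(w, features):
    """Linear reward model: score = w · features."""
    return sum(wi * fi for wi, fi in zip(w, features))

def train_reward_model(comparisons, dim, lr=0.1, epochs=200):
    """Fit weights so that human-preferred responses score higher.

    comparisons: list of (chosen_features, rejected_features) pairs.
    Loss per pair (Bradley-Terry): -log(sigmoid(r(chosen) - r(rejected))).
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in comparisons:
            margin = reward(w, chosen) - reward(w, rejected)
            p_agree = 1.0 / (1.0 + math.exp(-margin))  # model's P(human prefers chosen)
            # Gradient step on the loss: widen the margin, more strongly when
            # the model currently disagrees with the human label.
            for i in range(dim):
                w[i] += lr * (1.0 - p_agree) * (chosen[i] - rejected[i])
    return w

# Hypothetical features per response: [helpfulness, toxicity], each in [0, 1].
comparisons = [
    ([0.9, 0.1], [0.4, 0.2]),  # the annotator preferred the first response
    ([0.7, 0.0], [0.8, 0.9]),
    ([0.6, 0.1], [0.3, 0.1]),
]
w = train_reward_model(comparisons, dim=2)
print(w)  # helpfulness weight ends up positive, toxicity weight negative
```

Note that nothing here touches the model that produced the responses: the learned reward only scores outputs, which is consistent with the point that RLHF shapes what comes out rather than what went in.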

For a basic introduction to RLHF, check the Wikipedia entry and articles like this one on the Hugging Face community blog.

12’39”

“I did this with two collaborators, Dalena Tran and Will Freudenheim”

Dalena Tran is an artist and filmmaker based in Los Angeles. Her work takes shape between word, moving image, installation, live performance, sculpture, sound, and software. Engaging various media forms, she investigates the everyday confluences of language and expression; presence and immateriality; voyeurism and surveillance; urbanism and hegemony; play and pause. https://dalena.me/

Will Freudenheim is an artist, game designer, and researcher based in New York. He builds interactive systems for collective knowledge construction, animation, and live performance using real-time 3D engines. https://freudenheim.info/

14’18”

“They will train these kinds of AI agents in what are called toy worlds.”

From the introduction to Vivarium:

“Research labs like DeepMind overcome these constraints by training agents in simulated environments to perform actions through reinforcement learning. These controlled environments are toy worlds: simulations in which reality is abstracted into a relevant domain for training AI agents.

“Toy worlds allow for quick iteration, broad proliferation, and streamlined data synthesis in pursuit of embodied intelligence. They output agents trained in situ or recorded data of their behavior. Some toy worlds also contain humans, providing rich interactive data between organic and synthetic forms.”

(Source)
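To make the idea of a toy world concrete, here is a minimal sketch that is not drawn from Vivarium or DeepMind: a hypothetical one-dimensional corridor of five cells in which an agent learns, through tabular Q-learning (one of the simplest reinforcement learning algorithms), to walk to a goal. Reality is abstracted into a position, two actions, and a reward; the world size, learning rate, discount, and exploration rate are arbitrary assumptions.

```python
import random

N_STATES = 5          # positions 0..4 in a one-dimensional "corridor" world
GOAL = N_STATES - 1   # reaching the last cell ends the episode with reward 1
ACTIONS = (-1, +1)    # step left or step right

def step(state, action):
    """Toy-world physics: move, stay inside the corridor, reward only at the goal."""
    nxt = min(max(state + action, 0), GOAL)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit the table, sometimes explore;
            # ties between equally valued actions are broken at random.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = step(state, action)
            # Q-learning update towards reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q

q = train()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}: every non-goal cell says "move right"
```

Everything the agent has learned lives in the table `q`, and it is only as good as the corridor's rules are faithful to whatever the corridor stands for.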

14’40”

“They try to do what is called the Sim2Real transfer”

Frame of Vivarium’s introduction video, 2023

“After an agent has been trained in simulation, its capabilities can be transferred to the real, but this transfer is not a given. Called the Sim2Real gap, the simulation may either incorrectly model actual physics or fail to capture the indeterminacies of the real.”

(Source)
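The gap can be illustrated with a deliberately tiny, hypothetical physics model: a block launched at speed v slides a distance v²/(2μg) before friction μ stops it. An agent "trained" in a simulator that assumes μ = 0.30 finds the launch speed that reaches a 2 m target; if the real surface has μ = 0.40, the same speed falls short. The friction values, target, and bisection-based "training" are all illustrative assumptions standing in for the simulator incorrectly modelling actual physics.

```python
G = 9.81  # gravitational acceleration, m/s^2

def slide_distance(v, mu):
    """Distance a block launched at speed v slides before friction mu stops it."""
    return v * v / (2 * mu * G)

def train_in_sim(target, mu_sim, lo=0.0, hi=50.0, iters=60):
    """'Train' a launch speed in simulation by bisection (distance grows with v)."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if slide_distance(mid, mu_sim) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

TARGET = 2.0                    # metres
MU_SIM, MU_REAL = 0.30, 0.40    # the simulator underestimates friction
v = train_in_sim(TARGET, MU_SIM)
sim_result = slide_distance(v, MU_SIM)
real_result = slide_distance(v, MU_REAL)
print(f"sim: {sim_result:.2f} m, real: {real_result:.2f} m")  # sim: 2.00 m, real: 1.50 m
```

The policy is perfect inside the simulation and wrong outside it by exactly the ratio of the modelled to the actual friction, which is the Sim2Real gap in its simplest form.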

16’48”

“Learned intelligence should be collectivized”

“To bridge [the Sim2Real] gap, learned intelligence should be collectivized to amass the diverse data necessary for generalization, yet embodied AI research is currently conducted in isolated circumstances. Incompatible libraries hinder the accumulation of shared skills; there is a dearth of modular assets, universal protocols, or capability scaffolding.”

(Source)
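Using the same hypothetical sliding-block model, the sketch below contrasts an isolated lab, which trains in a single world, with a pooled dataset gathered from several toy worlds that differ in friction. The pooled data let a simple linear regression learn how the required speed depends on friction, so the policy generalizes to a surface that none of the worlds simulated exactly. It assumes, for simplicity, that the agent can measure the friction of the surface it is standing on; the world list and all numbers are illustrative.

```python
G = 9.81
TARGET = 2.0  # metres

def slide_distance(v, mu):
    """Distance a block launched at speed v slides before friction mu stops it."""
    return v * v / (2 * mu * G)

def train_in_world(mu, lo=0.0, hi=50.0, iters=60):
    """Find, by bisection, the launch speed that reaches TARGET in one world."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if slide_distance(mid, mu) < TARGET:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx

# Isolated lab: one world, so all it can learn is a single fixed speed.
isolated_v = train_in_world(0.30)

# Shared library: many toy worlds with different friction, pooled into one dataset.
worlds = [0.20, 0.30, 0.40, 0.50, 0.60]
speeds_sq = [train_in_world(mu) ** 2 for mu in worlds]
a, b = fit_line(worlds, speeds_sq)  # learns how the squared speed scales with friction

def pooled_policy(mu):
    """Launch speed predicted from the pooled data for a surface with friction mu."""
    return max(a * mu + b, 0.0) ** 0.5

MU_REAL = 0.45  # a surface none of the worlds simulated exactly
print(f"isolated: {slide_distance(isolated_v, MU_REAL):.2f} m, "
      f"pooled: {slide_distance(pooled_policy(MU_REAL), MU_REAL):.2f} m")
# isolated: 1.33 m, pooled: 2.00 m
```

The regression only works because the worlds share a common format (same target, same variables), which is a small-scale version of the argument for shared libraries and protocols.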

19’21”

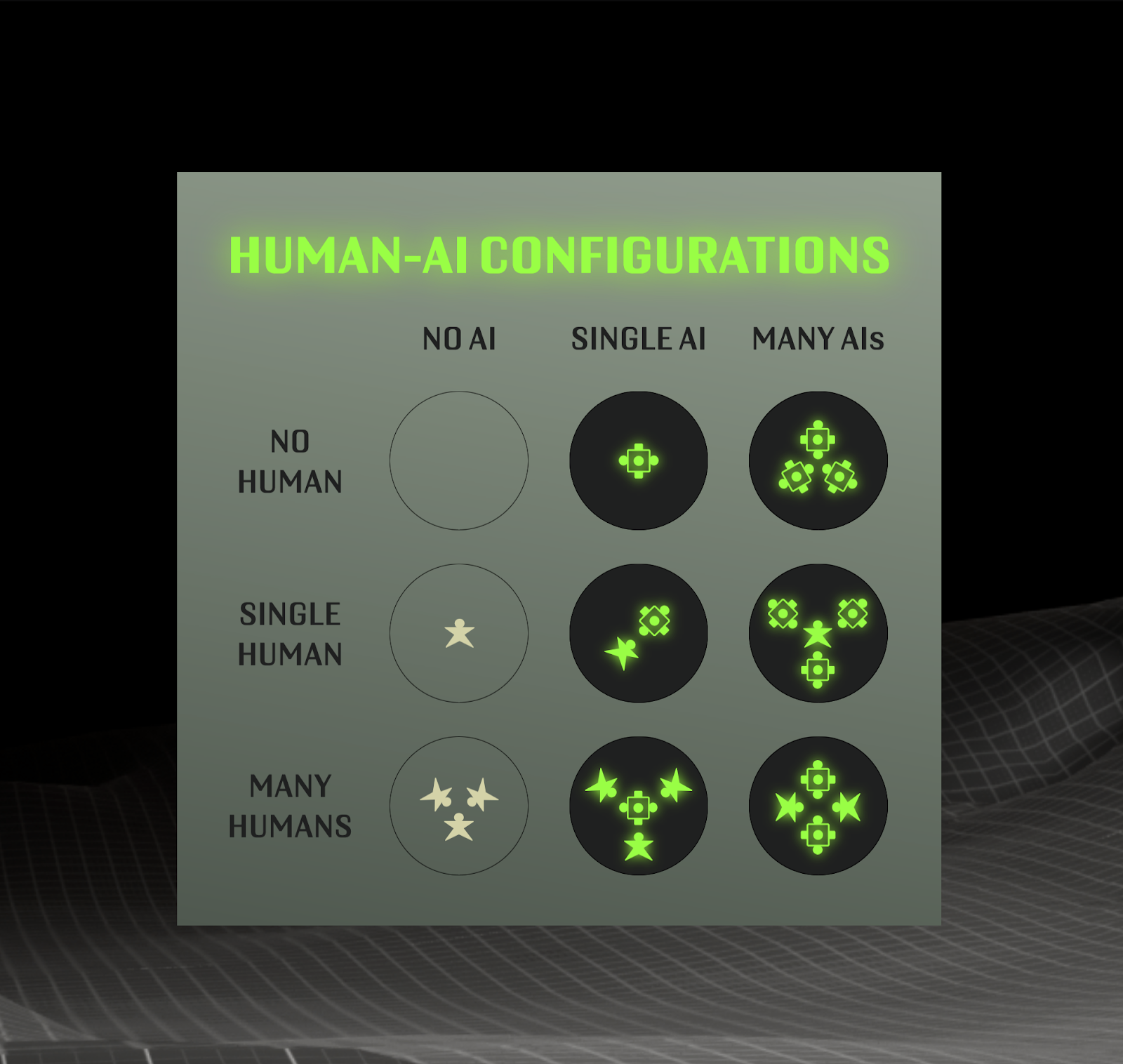

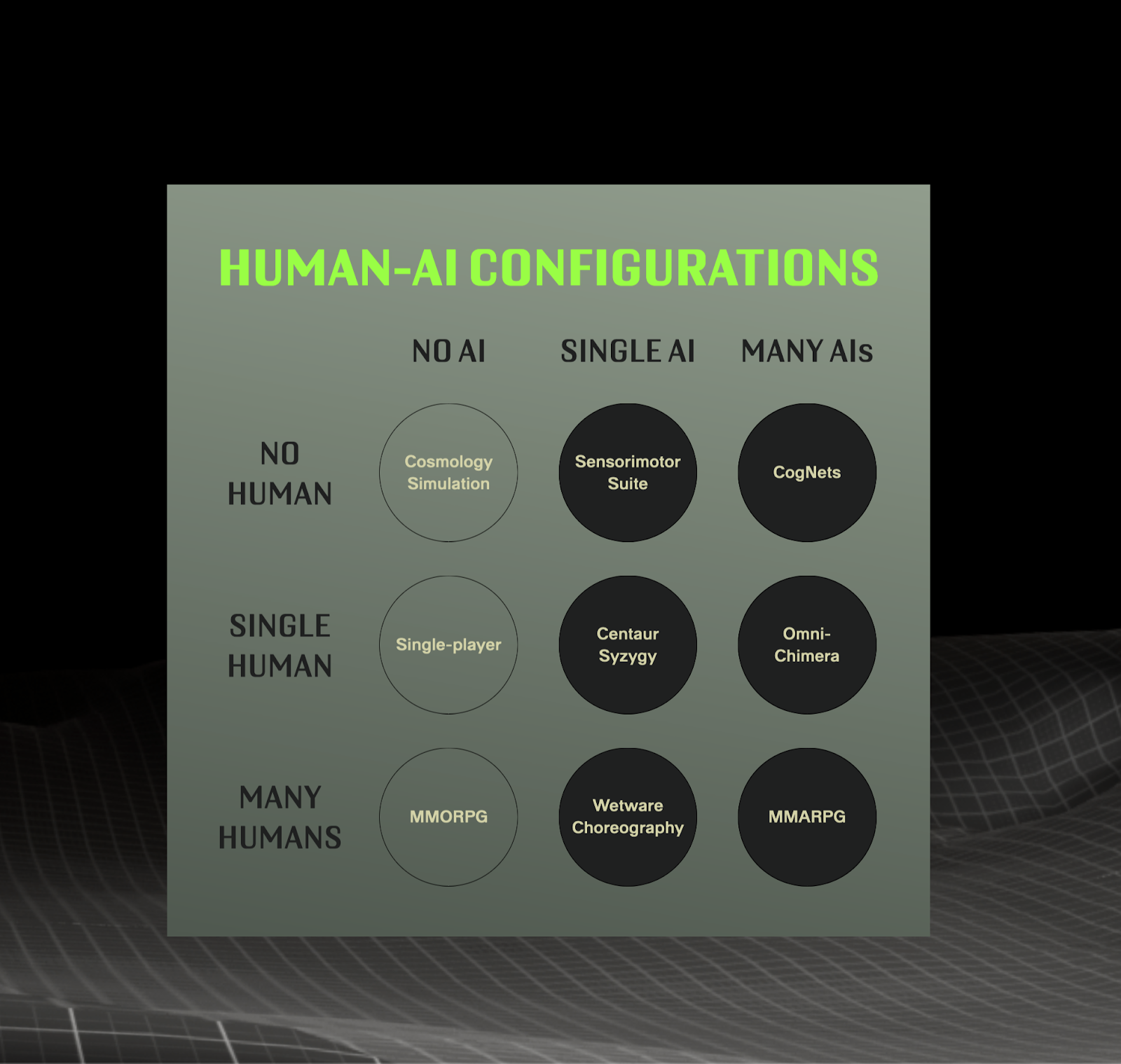

“A matrix of human AI configurations”

Vivarium’s 3x3 matrix of human-AI configurations

In the matrix, the first column covers configurations with no direct presence of AI, ranging from cosmologies (no humans) to existing human gameworlds (many humans). The remaining cells define scenarios that combine humans and AI agents in different proportions.
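One way to read the matrix is as a lookup table indexed by the number of humans and the number of AI agents, each collapsed to none, single, or many. The cell names below are collected from the Vivarium passages quoted in these notes; the (single human, no AI) cell is not named in those passages and is left empty, and placing sensorimotor suites in the (no humans, single AI) cell is our reading of the text rather than an explicit statement.

```python
from typing import Optional

def bucket(count: int) -> str:
    """Collapse a head count into the matrix's three levels."""
    if count < 0:
        raise ValueError("count must be non-negative")
    return "none" if count == 0 else "single" if count == 1 else "many"

# (humans, AIs) -> configuration name, as gathered from the quoted passages.
MATRIX = {
    ("none", "none"): "cosmology",
    ("single", "none"): None,  # not named in the passages quoted here
    ("many", "none"): "human gameworld",
    ("none", "single"): "sensorimotor suite",
    ("single", "single"): "centaur syzygy",
    ("many", "single"): "wetware choreography",
    ("none", "many"): "CogNet",
    ("single", "many"): "omni-chimera",
    ("many", "many"): "MMARPG",
}

def configuration(humans: int, ais: int) -> Optional[str]:
    """Name the matrix cell for a world with the given numbers of humans and AIs."""
    return MATRIX[(bucket(humans), bucket(ais))]

print(configuration(1, 1))   # centaur syzygy
print(configuration(0, 12))  # CogNet
```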

20’09”

“So in a single human / single AI world, you have what might be called a centaur pairing.”

“The interactions [between embodied AI and humans] are facilitated in centaur syzygies, or close unions, where a player and an agent are tightly coupled together. [...]

“A common paradigm on Vivarium sees players control an agent through remote sensing and top-down instruction. Within toy worlds, which allow flexible iteration yet maintain safety, humans trial offloading sensing, cognition, and labor to AI. [...]

“Particular worlds invert expected paradigms of human executive control by granting the agent powerful sensing and decision-making faculties, reducing the human to physical appendage. In others, the pairing shares an interface such as a machinic exoskeleton and negotiates shared control over it.”

(Source: https://vivarium.host/)

22’00”

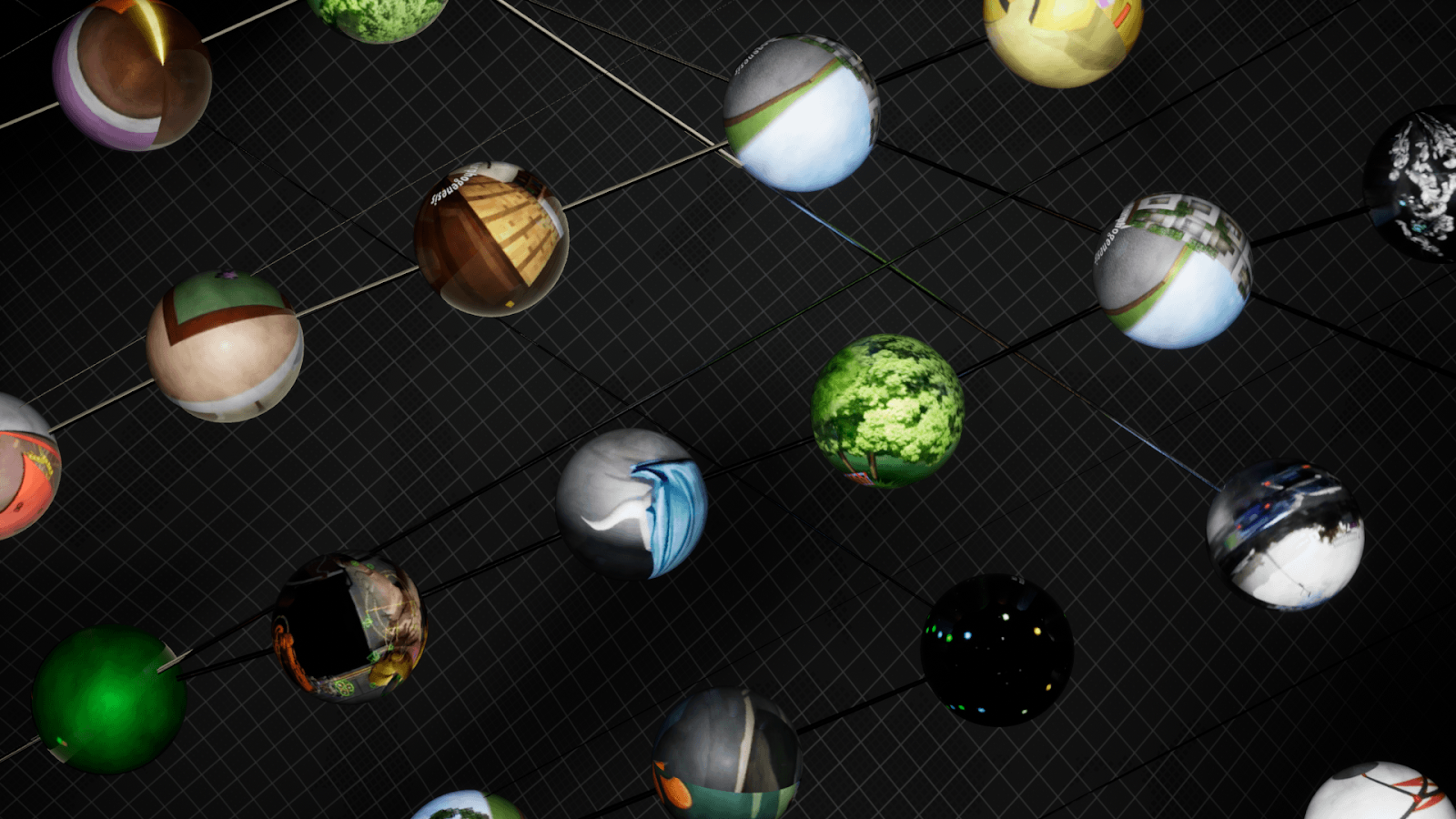

“The toy worlds that would have many AIs but no humans in it.”

“Once agents are equipped with individual motor skills, they practice multi-agent coordination as cognitive networks, or CogNets, within toy worlds populated by many AIs. [...]

“Tasks can include monitoring endangered species across large areas or assembling industrial machinery in close quarters. Swarms learn to distribute cognition and communicate. Some have members equipped with different senses, requiring creative signaling to span different umwelts. Other worlds inhibit inter-agent communication entirely, forcing stigmergic coordination.

“Over time, CogNets appear to behave as singular organisms. Agents operate at different scales; larger agents may even have “organs” composed of smaller ones. This nesting of cognition makes intelligence scaffolding and abiotic evolution possible, as agents recompose themselves of intelligent parts. These toy worlds lay the foundation for a planet populated by many minds at many scales.”

(Source)

22’28”

“It'd be almost like a school of fish of AI in the ocean”

The collective behavior of insect swarms and colonies, bird flocks, and fish schools has long caught human attention, but only in the last half century has it been studied as system behavior, in terms of emergent properties and related questions such as the costs and benefits of group membership, the transfer of information, and decision-making processes including feeding, coordinated movement, and synchronization.

These emergent properties can be associated with forms of cognition that extend beyond the boundaries of the body, such as stigmergic coordination, which arises when members of a group ‘talk’ to one another by marking the environment in ways that others will sense and act upon. Swarm intelligence arises when individual behaviors result in coordination even though each individual is aware of only a small part of the available information (the whole of which no single member knows) and no centralized control structure dictates how individuals should act.
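Stigmergic coordination can be sketched with a classic ant setup: two paths of different length between nest and food. The deterministic, averaged model below is a simplification assumed for illustration (real colonies are stochastic): ants never exchange messages, they only read and reinforce pheromone on the paths, yet the colony converges on the shorter one. The squared choice rule, the evaporation rate, and the deposit proportional to 1/length (shorter trips are completed, and marked, more often) are modelling assumptions.

```python
def double_bridge(rounds=100, ants=10, short_len=1.0, long_len=2.0,
                  evaporation=0.1, initial=1.0):
    """Averaged model of ants choosing between a short and a long path.

    No ant communicates directly; each one only senses the pheromone on the
    paths (stigmergy). Returns the final share of ants taking the short path.
    """
    tau_short, tau_long = initial, initial
    for _ in range(rounds):
        # Choice rule: the pull of a trail grows with the square of its strength.
        p_short = tau_short ** 2 / (tau_short ** 2 + tau_long ** 2)
        # Deposit: ants mark the path they used; shorter paths get more per unit time.
        tau_short += ants * p_short / short_len
        tau_long += ants * (1 - p_short) / long_len
        # Evaporation lets unused trails fade away.
        tau_short *= 1 - evaporation
        tau_long *= 1 - evaporation
    return tau_short ** 2 / (tau_short ** 2 + tau_long ** 2)

print(f"share of ants on the short path: {double_bridge():.3f}")
```

With two paths of equal length the model stays split at one half, so the convergence comes entirely from the marks left in the environment, not from any central instruction.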

Over the last four decades, scholars and researchers in systems thinking, mathematics, and computer science have tried to systematize and mimic these biological collective behaviors. At the same time, the notion of swarm behavior has deeply influenced other fields as well. For instance, in 2000 John Arquilla and David Ronfeldt wrote an influential report for RAND, Swarming and the Future of Conflict, which can also be considered a follow-up to two earlier seminal essays on the connections between networked intelligence and contemporary war and low-intensity conflict scenarios: Cyberwar is Coming! (1993) and The Advent of Netwar (1996).

In the case of Vivarium, the scenario in which CogNets act in the simulated world without humans is a form of swarm intelligence in which, unlike animal swarms (as far as we know), complete sensory information can be transferred immediately from one agent to all the others. As Christina Lu explains, this does not necessarily imply a centralized control structure; rather, it opens up the possibility of specialization and intelligence “scaffolding”.

28’41”

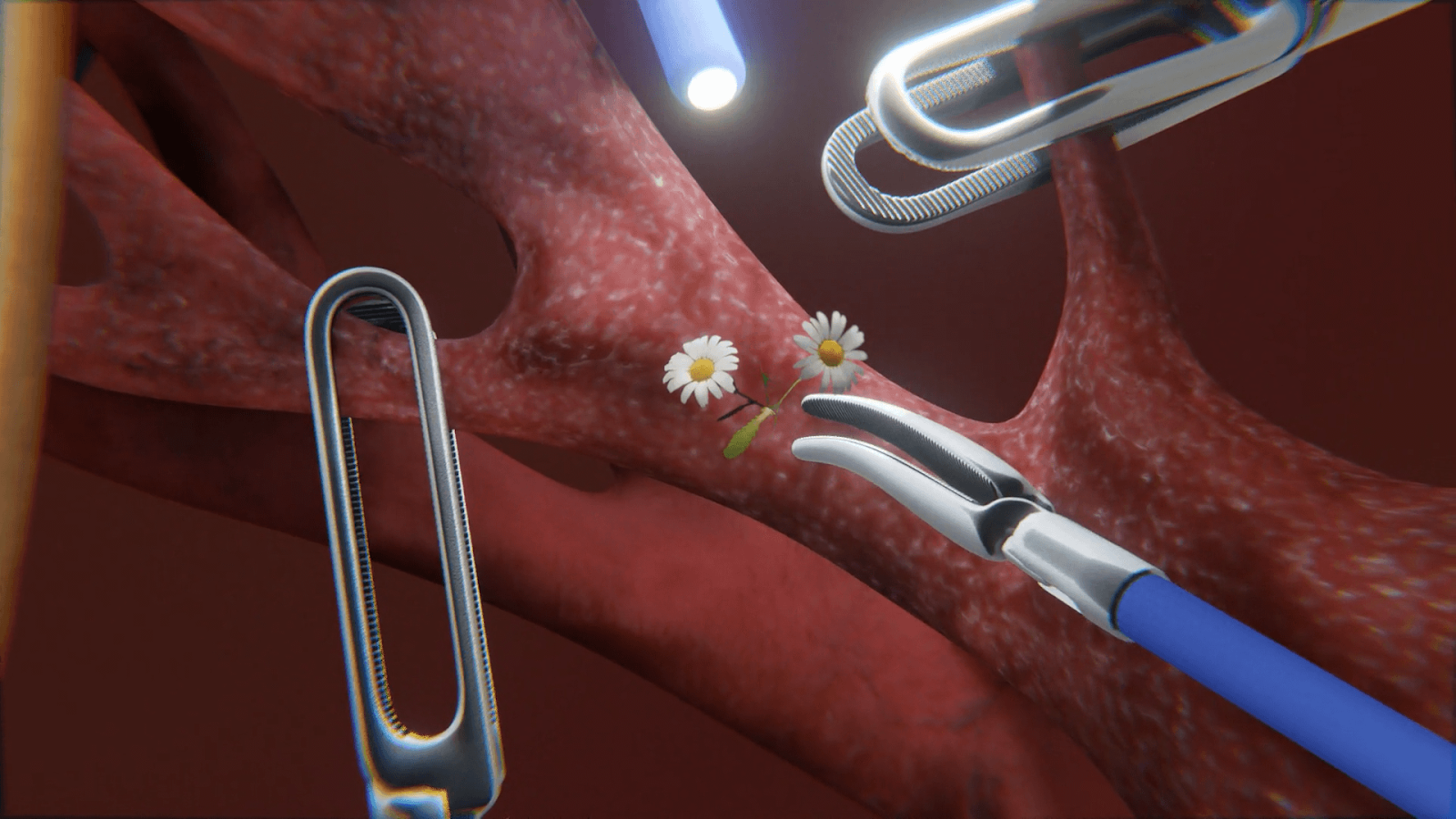

“Through continued training as omni-chimeras, human commands become less rigid as agents fulfill tasks with more abstract specifications”

“Omnidirectional chimeras, comprising a single human and many AI agents, synthesize skills acquired from CogNets and centaur syzygies. Humans operate at a higher level of physical abstraction within them; rather than provide close input to a single agent, they commandeer many.

“Common scenarios have the human dispatching agents equipped with different skills for a complex task, such as operating massive vehicles or performing surgery. The agents must coordinate with one another while integrating high-level commands.”

(Source)
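The dispatch step can be sketched, under heavy simplifying assumptions, as matching subtasks to agents by skill. In this hypothetical example the human has already decomposed a surgical task into subtasks that each require one skill, and the `dispatch` function greedily assigns each subtask to a free agent that has that skill. The agent names, skills, and greedy strategy are illustrative and not part of Vivarium's description.

```python
from dataclasses import dataclass, field

@dataclass
class Agent:
    name: str
    skills: set = field(default_factory=set)

def dispatch(subtasks, agents):
    """Assign each (subtask, required_skill) pair to a free agent with that skill.

    Greedy: subtasks are handled in order and each takes the first free match.
    Raises ValueError when no free agent has the required skill.
    """
    free = list(agents)
    plan = {}
    for subtask, skill in subtasks:
        match = next((a for a in free if skill in a.skills), None)
        if match is None:
            raise ValueError(f"no free agent can handle {subtask!r} (needs {skill!r})")
        plan[subtask] = match.name
        free.remove(match)  # each agent takes one subtask at a time
    return plan

# Hypothetical decomposition of "perform surgery" into skill-specific subtasks.
subtasks = [
    ("map anatomy", "internal body map"),
    ("hold tissue", "fine grip"),
    ("monitor vitals", "heat detection"),
]
agents = [
    Agent("a1", {"fine grip"}),
    Agent("a2", {"internal body map", "heat detection"}),
    Agent("a3", {"heat detection"}),
]
plan = dispatch(subtasks, agents)
print(plan)  # {'map anatomy': 'a2', 'hold tissue': 'a1', 'monitor vitals': 'a3'}
```

Greedy assignment is order-sensitive: if "monitor vitals" were listed first it would take `a2`, leaving no one to map the anatomy even though a valid assignment exists. A full bipartite matching would avoid that at the cost of more code.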

31’09”

“Wetware Choreography is a world in which a single AI is juggling a bunch of humans”

“The agent integrates multiple streams of noisy human input, prioritizes signals across them, and ultimately instructs players to accomplish tasks. [...] Within these toy worlds, agents learn a complex topology of human sociality, coordination, and control.”
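One standard way an agent could integrate several noisy human inputs, and decide whose signal to prioritize, is inverse-variance weighting: each report counts in proportion to how reliable it is assumed to be. The sketch below assumes each player reports a single number with a known noise variance; the player names and values are hypothetical, and Vivarium does not specify this method.

```python
def fuse(reports):
    """Integrate noisy scalar reports from several players.

    reports: dict mapping player -> (value, noise_variance).
    Inverse-variance weighting gives the minimum-variance unbiased linear
    combination when the players' errors are independent and unbiased.
    Returns the fused estimate and the players ranked from most to least reliable.
    """
    if any(var <= 0 for _, var in reports.values()):
        raise ValueError("variances must be positive")
    weights = {player: 1.0 / var for player, (_, var) in reports.items()}
    total = sum(weights.values())
    fused = sum(weights[p] * value for p, (value, _) in reports.items()) / total
    priority = sorted(reports, key=lambda p: reports[p][1])  # lowest noise first
    return fused, priority

# Hypothetical players reporting, say, the position of a target in metres.
reports = {"ana": (10.0, 1.0), "ben": (14.0, 4.0), "cho": (11.0, 2.0)}
fused, priority = fuse(reports)
print(f"{fused:.2f}", priority)  # 10.86 ['ana', 'cho', 'ben']
```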

34’20”

“...there's this concept of kit bashing”

Vivarium is conceived as an asset store, a simulation engine, and a toy world library. “Vivarium’s universe of toy worlds contains diverse scenarios that generate a broad spectrum of training data, while its centralized marketplace invites the broad participation necessary to make this data meaningful.” The asset store includes physically detailed objects, sensory packs and procedurally-generated layouts.

The authors give a few examples of possible assets:

- Mushroom foraging suite

- Mystical Forest Environment

- Aerial Ability Pack

- Internal Body Map for surgery

- Hydrogen bonding

- Winged musculature

- Heat detection

- Dog awareness

35’18”

“Easing the barrier to entry of what it takes to build both a toy world and also the agent itself.”

Vivarium’s toy world library and its asset store are designed to be open and interoperable so as to encourage participation, diversity, and shared knowledge. But is this design solid enough to be the basis of democracy “in a future where inter- and intra-human-AI communication amalgamates into a complex web of distributed embodied cognition” (as the authors of Vivarium describe it)?

In other words, what kind of policies would govern both the framework and the toy worlds themselves? What kind of public space would the asset store foster or even become? Or: how would AIs learning in real time require, or even force, the adjustment of existing policies? What kind of instruments or protocols of negotiation will be in place then? How will they manage disagreement, friction, and even conflict?

These and many other questions will be unavoidable if embodied cognition, as in the speculative framework of Vivarium, becomes the basis of a new global infrastructure of planetary computation capable of redefining logistics, economics, and science, as well as politics and culture.

35’44”

“This is leading us to the final slot in the matrix”

Again, from Vivarium’s introduction:

“Many AIs interact with many humans within massively multi-agent roleplaying games, or MMARPGs. This configuration is the endpoint of possible toy worlds. [...]

“To reach this quadrant, each of the previous ones must build upon another:

- Sensorimotor suites lay the groundwork, training a single agent to move through the world.

- CogNets extend individual capability towards inter-agent coordination and competition.

- Centaur syzygies introduce humans to the mix, teaching both parties the optimal distribution of physical capacity.

- Omni-chimeras further abstract the level of human instruction for many agents.

- Wetware choreography inverts that paradigm, training single agents to arbitrate many humans.”
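The progression in this list can be encoded as a small dependency graph and ordered with a topological sort, which recovers the path towards MMARPGs. The graph follows the list as a chain and adds the link, stated in the omni-chimera passage, that omni-chimeras also synthesize skills from CogNets; the encoding itself is our illustrative assumption. It uses Python's standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Each configuration -> the configurations it builds upon (illustrative encoding).
DEPENDS_ON = {
    "sensorimotor suites": set(),
    "CogNets": {"sensorimotor suites"},
    "centaur syzygies": {"CogNets"},
    "omni-chimeras": {"centaur syzygies", "CogNets"},
    "wetware choreography": {"omni-chimeras"},
    "MMARPGs": {"wetware choreography"},
}

order = list(TopologicalSorter(DEPENDS_ON).static_order())
print(" -> ".join(order))
# sensorimotor suites -> CogNets -> centaur syzygies -> omni-chimeras -> wetware choreography -> MMARPGs
```

If a configuration were made to depend, directly or indirectly, on itself, `static_order()` would raise `graphlib.CycleError`, which makes this a cheap consistency check for a training curriculum.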

Medialab-Matadero Madrid