Neural Synthesis

Workshop: Real-time audio style-transfer and synthesis using neural networks

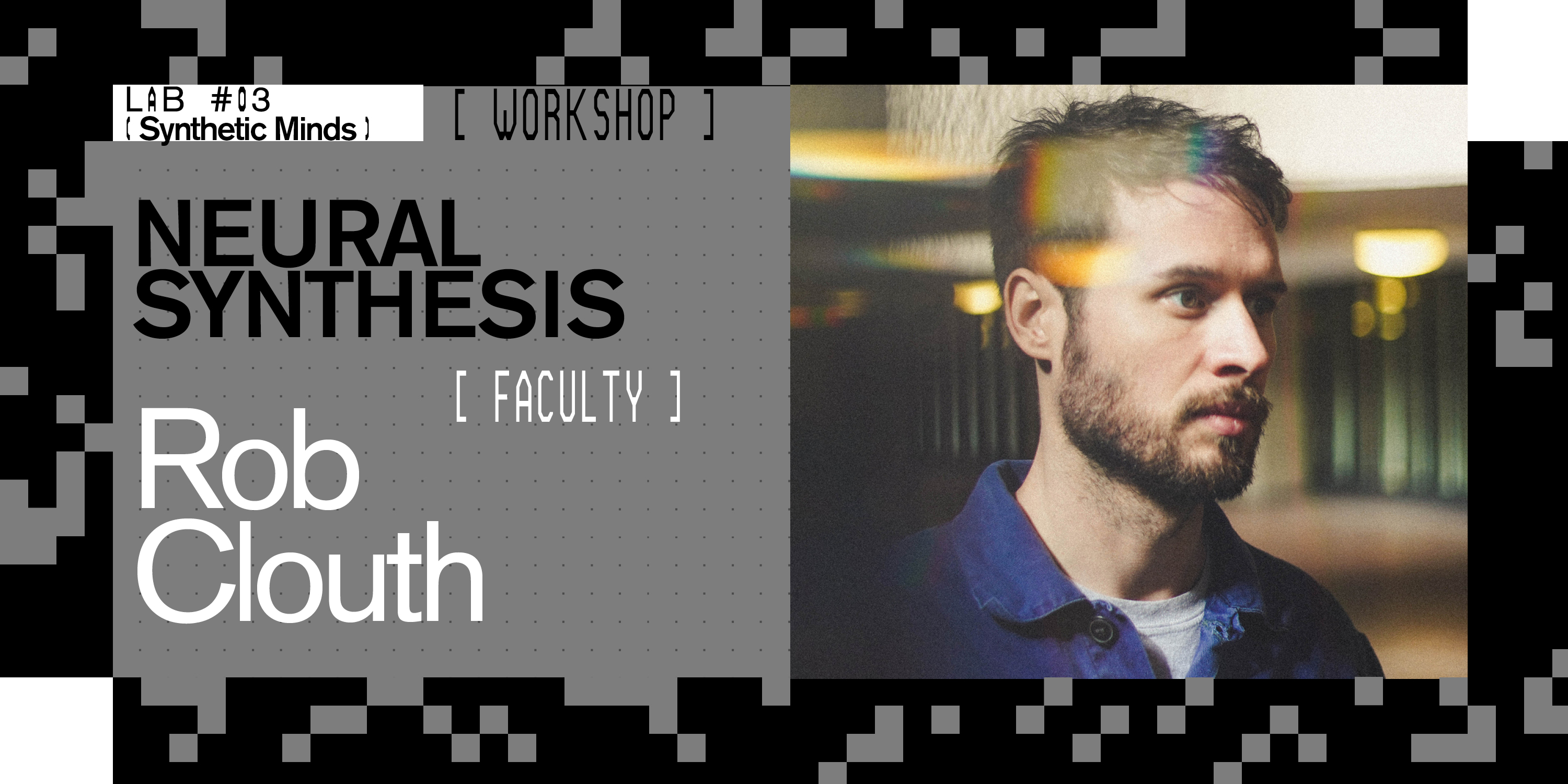

Rob Clouth is a British musician based in Madrid who uses machine-learning techniques for real-time audio style transfer, specifically of his own voice, combining the natural flow of improvised beatboxing with the sound quality and realism of designed samples.

As a multidisciplinary artist working in the fields of electronic music, software engineering and new media, Rob Clouth's motivations include searching for raw emotion amongst intricate musical structures, developing experimental audio software solutions and rethinking the ways in which we interact with music and technology.

Rob has divided his workshop Neural Synthesis into two parts, aiming to give attendees the knowledge and skills to generate high-quality audio with neural networks in real time. In the first part, participants will learn how state-of-the-art neural networks are applied to synthesizing audio in real time. They will also be introduced to techniques for collecting data sets in a fair manner, and will be guided through using Google Colab, a platform that allows them to train models free of charge. To allow time for the training process, the second part of the workshop will take place two weeks later. During this session, participants will be taught how to use the trained models to process and generate sounds in their DAW of choice (Ableton Live preferred). By the end of the workshop, attendees will have the skills needed to use neural networks for real-time audio generation in their own creative work.

The workshop won’t require any coding, as Rob will provide the scripts for training. However, familiarity with Google Drive, knowledge of audio production software and an interest in music and sound are essential. Participants should bring their own laptops, headphones and a DAW.

Participants will be encouraged to include the collected data sets and trained models produced in the workshop in an open source repository of AI models, which will then be used by Rob at the Open LAB#03. At the end of the workshop, Rob commits to delivering an open source library of generative audio models trained on different sound categories, which will be available online until at least 29 February 2024. The library will contain at least 20 models trained by Rob and those developed by the workshop participants.

This workshop will be delivered in English.

Medialab-Matadero Madrid
